Everyone knows the one thing you shouldn’t predict about AI is the timelines. So let’s predict AI timelines!

Is AI the real deal?

Yes.

How big a deal?

A very big deal.

That’s not very helpful. Maybe quantify that a bit?

This will have real impact on people’s lives. The media periodically gets overexcited about nothingburgers like 5G or the metaverse. This isn’t one of those. Though I think almost everyone knows this by now.

If AI progress stops where it is today (Feb 2024), at the level of GPT-4 and Midjourney 6, we have the ingredients of a typical tech revolution, roughly on par with the introduction of smartphones. The impact would show up over the next ten years, as AI is integrated into products and diffuses out to the wider economy. The way this all plays out is very familiar to everyone.

If AI keeps improving at the current pace, we have a very different scenario. For a good historical comparison, we might need to reach back to the invention of the steam engine, or even all the way back to the emergence of homo sapiens. Everything depends on where AI progress tops out, and when.

So will recent AI progress stop, or keep going?

The short term

In the short term (~2 years), further progress is all but certain. New models are in the pipeline, being prepared for release. Big tech is investing like crazy, with a large amount of compute just starting to come online. Earnings calls are full of CFOs flagging further heavy investment to come. On the algorithmic side, the current generation of models has many obvious gaps that are going to get filled. The one potential speed bump is that we’re close to using all of the publicly available training data in some domains, but it probably won’t halt progress in the short term. A sceptic could also point to Google’s recent Gemini Ultra model being only on par with GPT-4, despite coming a year later and with enormous effort. But weighing everything together, continued progress seems very likely in the near future.

The medium term

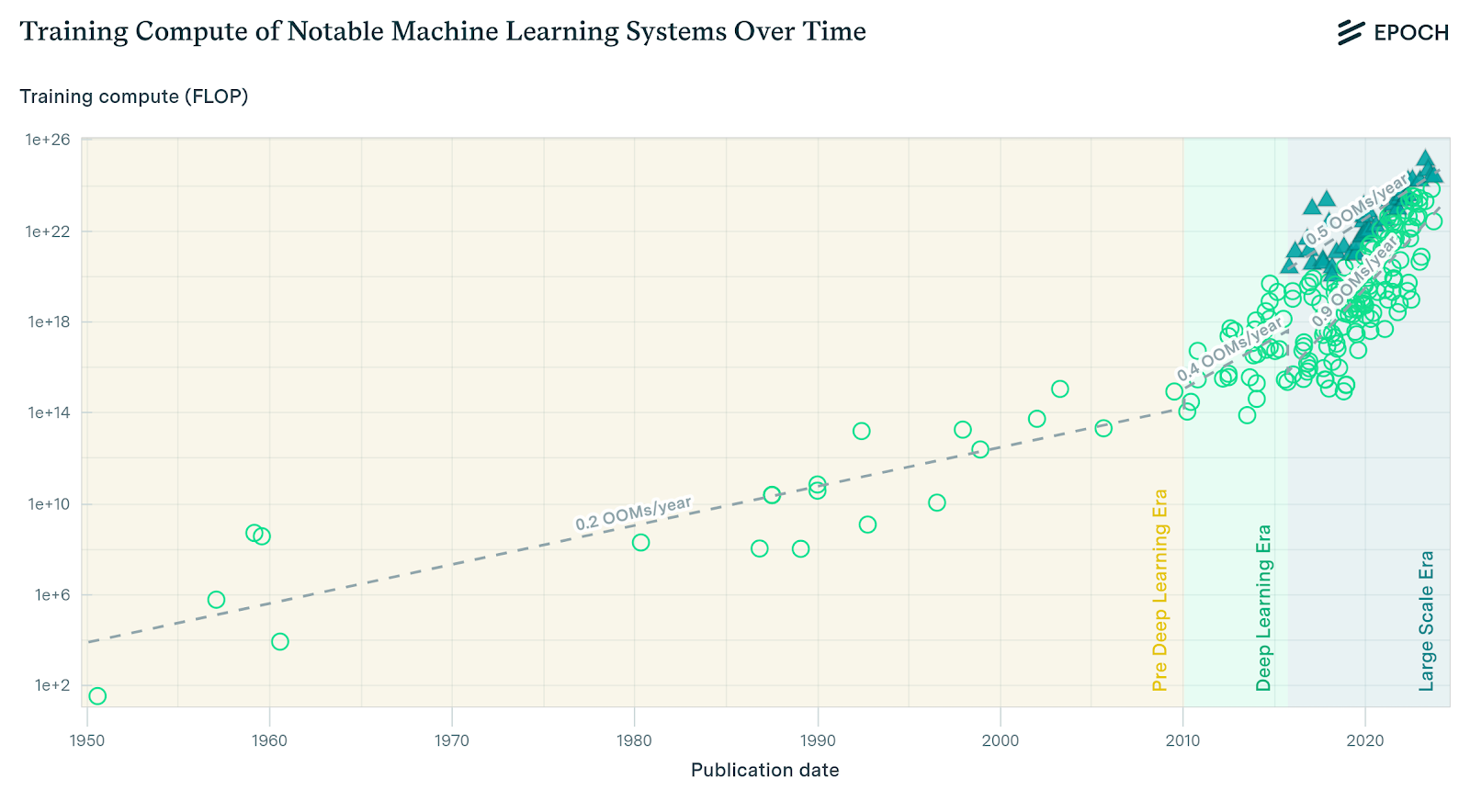

The medium term (~10 years) is the hardest to predict. Everything hangs on whether AI reaches a critical threshold of capability before the training costs become too high to afford. Recent AI progress is not happening at a natural pace, where deployed compute increases in line with Moore’s Law. Instead we are aggressively pulling forward the future by throwing larger and larger amounts of money at the problem. This has dramatically bent the compute curve and delivered spectacular advances, but the dollar cost of training frontier models has been rising by 10x every 2 years. Clearly that can’t continue for long.

The largest models are now eye-wateringly expensive to train. GPT-4 training cost more than $100m. Meta recently spent ~$9B on Nvidia chips for training next generation models. Even for big tech, this is a lot. These companies have cash on hand of $50B – $150B, and annual incomes of $30B – $100B, but shareholders are likely to rebel before investment gets anywhere close to those ceilings. Normal commercial efforts cannot sustain the extreme recent trends in AI compute for more than a handful of years.

It’s possible things move beyond the realm of commercial efforts. Governments clearly (and correctly) see AI as a strategic technology, and the US has already made aggressive moves to stay ahead. Great power competition can unlock maybe another one or two orders of magnitude of budget. For comparison, the Manhattan Project cost $25B (inflation adjusted), the Apollo Program cost $280B, and the Inflation Reduction Act contains around $400B of green energy subsidy. OpenAI has been talking about a need to spend $7T (trillion!) for AI-related chip manufacture and power infrastructure. For context, global GDP is $100T.

All the money in the world cannot outrun an exponential for very long. If we take GPT-4 as a baseline, costing $100m in 2023, and maintain the trend of 10x cost increase every 2 years, individual model training costs reach $1T by 2031, and match world GDP around 2035. So OpenAI is not making up big numbers for no reason. But it’s not even clear you could deploy capital that fast, before other constraints bite harder (e.g. time required to build data centers, skilled labour to expand chip manufacturing, etc).
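The extrapolation is easy to check for yourself. Here is a minimal sketch in Python that uses only the figures quoted above ($100m in 2023, 10x every 2 years, world GDP of roughly $100T). The function and constant names are made up for illustration, and the trend itself is the assumption being tested, not a forecast.

```python
import math

BASE_YEAR = 2023
BASE_COST = 100e6      # GPT-4 baseline quoted above: ~$100m
GROWTH_FACTOR = 10     # cost multiplies by 10x ...
GROWTH_PERIOD = 2      # ... every 2 years
WORLD_GDP = 100e12     # ~$100T, as quoted above


def training_cost(year):
    """Extrapolated frontier training cost in dollars for a given year."""
    periods = (year - BASE_YEAR) / GROWTH_PERIOD
    return BASE_COST * GROWTH_FACTOR ** periods


def year_cost_reaches(target):
    """Year in which the extrapolated cost hits `target` dollars."""
    periods = math.log10(target / BASE_COST) / math.log10(GROWTH_FACTOR)
    return BASE_YEAR + GROWTH_PERIOD * periods


# Print the cost curve every two years, then the two crossover points.
for year in range(2023, 2037, 2):
    print(year, f"${training_cost(year):,.0f}")

print("reaches $1T around", round(year_cost_reaches(1e12)))
print("reaches world GDP around", round(year_cost_reaches(WORLD_GDP)))
```

Four more 10x steps take $100m to $1T, and another two take it to $100T, so the whole runway from today's costs to world GDP is only six doublings-of-zeros long.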

And what does such enormous investment buy you? If we pursue pure scaling, the Chinchilla law says that modelling loss halves for every 100x increase in training compute. What this actually means in terms of qualitative model capabilities is very hard to say. There are also likely to be algorithmic advances, but their impact is even harder to predict. In short, it’s hard to know.
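To get a feel for how slowly that rule of thumb pays out, here is a small Python sketch. It takes the "loss halves per 100x compute" figure at face value as a pure power law, which is this post's simplification rather than the fitted Chinchilla formula; real scaling-law fits are more involved. The names are illustrative only.

```python
import math

COMPUTE_PER_HALVING = 100  # rule of thumb from the text: loss halves per 100x compute


def relative_loss(compute_multiplier):
    """Loss relative to baseline after scaling compute by `compute_multiplier`."""
    halvings = math.log(compute_multiplier) / math.log(COMPUTE_PER_HALVING)
    return 0.5 ** halvings


# The same rule written as a power law: loss ~ compute ** (-implied_exponent)
implied_exponent = math.log(2) / math.log(COMPUTE_PER_HALVING)

for multiplier in (10, 100, 10_000, 1_000_000):
    print(f"{multiplier:>9,}x compute -> loss x{relative_loss(multiplier):.3f}")
print(f"implied power-law exponent: {implied_exponent:.3f}")
```

Under this assumption a million-fold increase in compute buys just three halvings, which is why the dollar cost climbs so much faster than the loss falls.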

One thing we can say confidently is that we’re approaching a critical point, and it’s no more than a few years ahead. The dollar scaling that has driven recent gains can’t keep going. Either AI delivers a significant capability unlock before the money runs out, or progress stalls. In the latter case, we’ll stay in that state, possibly for decades[1], until Moore’s Law catches up or there’s a big algorithmic breakthrough.

The long term

In the long term (i.e. by the end of the century), these contingent timeline issues should average out, and transformational change seems very likely. I would bet heavily on AI exceeding human performance on essentially every task of relevance before 2100. This will be the most important event of the last hundred millennia, and children being born today will be around to see it. Whether it’s a positive or negative event is a topic for another post.

I don’t buy it. Any prediction for the end of the century is almost certain to be wrong. The next few years are a lot more relevant, and you gave a hedged answer there.

Big changes can sometimes be predictable far in advance, at least in broad brush outline. Climate change is an example. But OK, let’s focus on the near future. How far can AI get before the money runs out, which probably means the next 10-15 years?

Here are a few scenarios:

The Damp Squib

Scenario: AI progress stalls while it’s still basically useless.

Tools like GPT-4 and Midjourney remain mostly a curiosity, not reliable enough for serious work. There are a few niches of real utility, but they have negligible effect on the wider economy. After the hype passes, no company generates >$1B in revenue from AI, and it doesn’t form part of our daily lives. AI investment tanks, and the technology stays stuck at the current level for another decade or two.

Comment: This was plausible even two or three years ago, but seems more and more unlikely by the day. I think we’re almost past the point where this is even possible.

Probability: 10%

The Typical Cycle

Scenario: AI improves a bit beyond the current level, but then hits a wall and further progress is slow.

We get a technology revolution that’s similar in magnitude to the rise of the internet or the smartphone. The media seems to talk about nothing else. AI amplifies human abilities for many aspects of white collar work, and provides full automation of a handful of job categories. Previously highly skilled tasks like media creation and coding become approachable to a much wider audience, at least for simpler projects. It’s possible to entirely delegate some chores to AI agents, for example travel planning. There are brand new capabilities in bioinformatics and materials science. In the physical world, autonomous vehicles gradually spread into common usage. Robotics makes a dent in manufacturing, logistics, and farming, but many processes still require a human. Over a trillion dollars of market cap is attributable to AI-based products (most of it in existing companies, but at least a few hundred billion from newly IPO’d startups).

This is all significant, but the scale and pace of change is no different to other technology shifts from the last few decades. The jump from 1970 to 2035 is less than the jump from 1870 to 1935. Everything is still pretty familiar, just with a few improvements. It’s not the wholesale transformation of the early 20th century driven by the advent of cars, electricity, radio, telephones, aviation, cinema and home appliances.

Comment: This assumes we refine everything that’s currently half baked, but there are no major surprises to come. It’s a plausible base case. Things will definitely go further than this in time, but not necessarily without another AI winter in between.

Probability: 40%

The Jetsons Pause

Scenario: AI continues to improve rapidly, but stalls just a little short of human level.

We have a historic social transformation, on the scale of the Industrial Revolution. AI can do the vast majority of the work for most white collar jobs, and we can entirely delegate complex pieces of work to AI agents. But social changes take time. Productivity rises rapidly in the areas where the social friction is lowest, but human factors greatly slow down the change elsewhere.

In large white-collar organizations, most of the work of junior employees is gradually absorbed by AI. The junior ranks shrink over time, via reduced hiring rather than mass layoffs. Senior employees, whose work is internal political battles, reviews, and strategic decision making, are relatively unaffected.

Guild professions retain their legal chokehold over key actions. AI can diagnose you, but only a doctor can write you a prescription. AI can do your accounts, but only an accountant can sign your audit. Only a lawyer can represent you in court. The ranks of junior accountants and lawyers thin greatly, but the profession as a whole successfully resists radical transformation.

A new breed of hyperproductive startups and SMBs emerges that takes full advantage of AI, with no historical baggage. Tiny teams and single individuals, assisted by large numbers of AI agents, can do work that was previously the domain of major corporations. We see the rise of the solopreneur billionaire, and the hit movie with one name in the credits. This model of production gradually out-competes the old guard, at least everywhere regulation permits, but it takes decades.

In lower paid jobs, the story is a bit different. Employees have less power to resist change, so the transformation progresses rapidly in some areas. Customer support and call centers are largely automated within a decade. But jobs with face-to-face human interaction, such as shop assistants, waiters and child care, are relatively unaffected. Physical work of all kinds is also slow to change. Meanwhile, wages rise across the board via the Baumol effect.

Robotics lags behind pure AI, because hardware is hard and less training data is available, but the lag is less than a decade. Automation occurs roughly in order of the manual dexterity required. Some jobs such as driving, warehouse work and agricultural labour are automated within ten to fifteen years. More dexterous work, such as construction, cooking and household chores, takes longer. But over the next two to three decades essentially everything is automated. Autonomous vehicles and home service robots are the biggest change to domestic life since the early 20th century.

In manufacturing, there is a large hit to the developing world, as labour cost advantages become less important. Most factories become “dark” or close to it, with few human employees. There is a manufacturing renaissance in the West.

AI still has capability gaps – it sometimes makes odd mistakes, and for the highest end work the output lacks a certain spark. AI isn’t great at advancing the frontier of knowledge on its own. The very best writing, best engineering, best scientific research still requires humans, though with liberal AI collaboration.

Comment: I think we’d be very lucky to land in this scenario. Effectively we get compute governance by accident. We gain a lot of the benefits of AI, but pause just before the superhuman phase, which is likely to be the most disruptive and most dangerous. The world gets an extended period of time to adjust, and to work on AI safety with the best possible augmented tools.

It requires us to fortuitously run out of steam in a narrow band of the AI capability spectrum. But given where things are now, and the relatively short runway before model training costs become unaffordable, it doesn’t seem completely implausible. I think there is a decent chance we land here, though it’s finely balanced.

Would this scenario have much higher unemployment and social unrest due to the job market disruption? For the last few hundred years, new occupations have always emerged to absorb the labour that gets freed up. It doesn’t always happen instantly though, and there were rocky decades in some phases of the Industrial Revolution. It’s also possible that this time is fundamentally different, and we end up with a lot of people lacking a role in society. Personally, I think we will figure it out one way or another. If it doesn’t happen naturally, society has many levers it can pull. We could increase government make-work, or have longer periods of free education and earlier retirement, which amounts to a partial basic income by stealth. Or perhaps even full UBI. One way or another, I think society will find overt or covert ways to redistribute the gains from automation, at least sufficiently to prevent social breakdown.

Probability: 40%

The Future is Now

Scenario: AI reaches human level, and exceeds it shortly thereafter.

We have a full-on technological Singularity[2]. AI out-thinks and out-works humans. The highest quality output in every field is generated by AI, including in frontier scientific research.

Even with the arrival of superhuman intelligence, the world doesn’t change overnight. The initial period of this scenario is not so different from the previous one. Capacity constraints in the industrial base (e.g. chip fabs and power plants) provide some level of speed limit on the roll-out. Enabling technologies, such as robotics, lag behind by several years. Any new technology takes time to diffuse, no matter how transformative.

Many voices call for a slow-down for safety reasons, but the genie is out of the bottle at this point, and the race dynamics are strong. There are successful international agreements around AI safety, but there’s also a level of suspicion and great power competition. It’s obvious that those who don’t adopt AI will be out-competed by those who do. Some safeguards are put in place with positive effect, but there is no consensus for a long pause. Ultimately regulation doesn’t materially impact timelines.

In economic life, there are still many areas in which humans prefer to deal with other humans, sometimes because a human touch is genuinely valuable, and sometimes in the same way we value handmade goods today. Large parts of the current economy in fact remain in place, but a new and much larger AI-only economy grows up that routes around it. The legacy economy gradually withers, though it takes decades.

AI is now advancing the frontier of knowledge at pace, including AI technology itself, and things rapidly get exotic. How exactly is hard to say. Even superintelligent AI has limits; it is not a god. It cannot give you a precise 100 day weather forecast[3] or solve the halting problem. But it dwarfs the most famous figures in history in every intellectual domain. It is better at science, at politics, at art, and by gigantic margins. A lifetime of work can be produced in days. The physical world still provides a speed limit on some scientific research – it takes time to fabricate equipment and collect data. But these limits are far above human level. Our technology landscape starts to change dramatically on short timescales.

The world is in a soft take-off, and anything beyond this point is hard to foresee.

Comment: It seems bonkers to be talking about this as something that could arrive in the next fifteen years. Indeed, it’s not my base case. But given where we are and the rates of progress, reaching such a tipping point seems possible. AI is not constrained to run on 20 watts and fit through the birth canal. It is undoubtedly going to exceed human capabilities at some point. I can see no in-principle reason to exclude it happening soon. Though I wouldn’t put all my chips on this.

Probability: 10%

These timelines just seem completely implausible to me.

In some ways timelines are a red herring. We might not reach AGI in the 2030s; maybe we go through multiple cycles of AI booms and busts first. Regardless, I think we will almost certainly reach AGI[4] some time this century[5].

A WW1 biplane, and an SR-71 Blackbird, less than fifty years apart.

Technological revolutions tend to take us further than we can imagine to begin with, and AI is clearly only at the beginning. We are in something like the biplane era of aviation. There is a lot more to come. Many of us, or our children, are going to live through it.

Maybe you’re totally wrong about all of this?

I’m almost certainly wrong about most of it. The future has a way of surprising us.

John von Neumann made some predictions about the path of atomic technology in 1955. He was at the heart of the field, and among the smartest people in history. Many of his predictions bear no resemblance to what actually happened.

I wouldn’t make any major life decisions on the basis of AI predictions. But it does seem like we are at a particularly interesting point in history. It’s a good time to pay close attention and react.

Even though von Neumann’s atomic predictions were wrong, one thing he says in the essay rings true to me: “Any attempt to find automatically safe channels for the present explosive variety of progress must lead to frustration”. What’s left to us then? “Apparently only … a long sequence of small, correct decisions.”

There’s no formula for safety, only vigilance[6].

Footnotes

1. One thing that we can probably rule out is an outcome like the Apollo Program, where we reach the moon and then never go back. AI systems are extremely expensive to train, but relatively cheap to run. So whatever point of capability we reach, we can continue to use.

2. Vernor Vinge famously quipped: “To the question, ‘Will there ever be a computer as smart as a human?’ I think the correct answer is, ‘Well, yes… very briefly’”. I think this is correct – once we get human level AI, superhuman AI will follow quickly.

3. Absent weather control.

4. Whether AGI would arrive this century used to be a point of debate, but I think it is now almost a consensus. This article is a good on-the-record account of some of the views of prominent AI scientists.

   “There is no question that machines will become smarter than humans—in all domains in which humans are smart—in the future,” says Yann LeCun, Meta’s chief AI scientist. “It’s a question of when and how, not a question of if.”

   Geoff Hinton, the father of deep learning, says “I have suddenly switched my views on whether these things are going to be more intelligent than us. I think they’re very close to it now and they will be much more intelligent than us in the future”.

   Andrew Ng, another prominent AI leader, in 2015 described AI safety as “like worrying about overpopulation on Mars”, and said that AGI was “hundreds of years from now”. More recently he revised his timeline down to 30-50 years.

5. Assuming compute keeps increasing, which seems probable as far as I can tell. Even the foothills of AI provide enough economic utility to incentivize further chip development, and it seems like there is still plenty of room at the bottom, at least for AI-specific workloads.

6. Or to put it another way: attention is all you need :-)
